LLMs fundamentally work by turning inputs like text into tokens and embedding those tokens in some high-dimensional space. Associations can be made between these embeddings using whatever notion of distance is relevant, usually cosine similarity. While abstract, this measure gives a rough idea of how similar two sequences of text are.
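
As a minimal sketch of that similarity measure, here is cosine similarity in plain Python. The three-dimensional vectors are made up for illustration; real embeddings come from an embedding model and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy "embeddings" (assumed values, not produced by any real model).
capital_canada = [0.9, 0.1, 0.0]
capital_australia = [0.8, 0.2, 0.1]
oracle_migration = [0.0, 0.2, 0.9]

print(cosine_similarity(capital_canada, capital_australia))  # close to 1
print(cosine_similarity(capital_canada, oracle_migration))   # close to 0
```

Values near 1 mean the two vectors point the same way, values near 0 mean they are unrelated, and -1 means they point in opposite directions.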

On a practical note, when we use LLMs, mostly we ask them questions. We ask questions, get answers, and evaluate those answers. Benchmarks are all oriented around this idea of being better and better at correctly answering problems. Personally, I tend to think of myself as being good at asking good questions. It seems that we haven't really made much of an effort in attempting to make an LLM ask the good questions for us. So I started with an initial experiment to do just that.

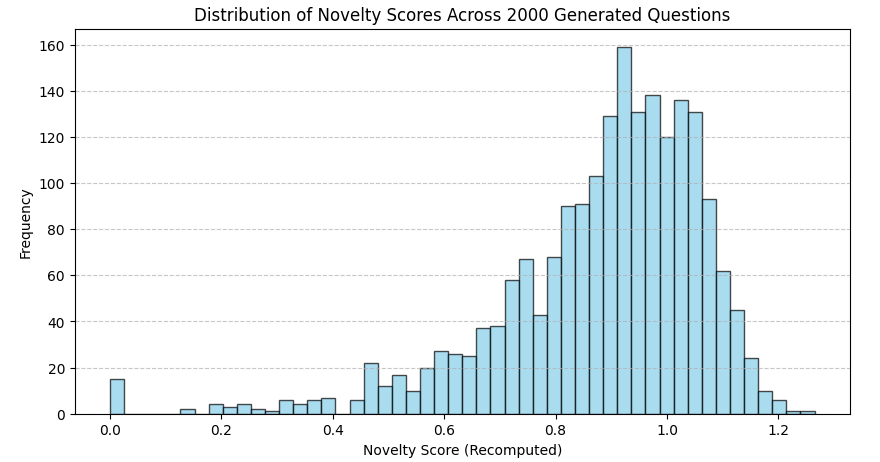

I downloaded Mistral 7B v0.3 and asked it to generate 2000 questions. I put all of them in an embedding space. Then I went through all of them and scored them based on how different each question was from all the other questions. This gave me a distribution of just how novel the model's responses are if you just keep asking it for questions.
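
One way such a score could be computed is sketched below. The exact formula is an assumption on my part for illustration: each question's novelty is taken as one minus its mean cosine similarity to every other question. The embeddings are toy values, and `novelty_scores` is a hypothetical helper name.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def novelty_scores(embeddings):
    """Score each embedding by its mean cosine distance to all the others."""
    if len(embeddings) < 2:
        raise ValueError("need at least two embeddings to compare")
    scores = []
    for i, current in enumerate(embeddings):
        others = [e for j, e in enumerate(embeddings) if j != i]
        mean_sim = sum(cosine_similarity(current, o) for o in others) / len(others)
        scores.append(1.0 - mean_sim)
    return scores

questions = [
    "What is the capital city of Canada?",
    "What is the capital city of Australia?",
    "What is the capital city of Canada?",  # duplicate, as in the real output
    "How do you remove unused libraries in JupyterLab?",
]
# Toy embeddings (assumed values); duplicates share the same vector.
embeddings = [
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.1],
    [0.9, 0.1, 0.0],
    [0.0, 0.2, 0.9],
]

scores = novelty_scores(embeddings)
ranked = sorted(zip(scores, questions), reverse=True)
for score, question in ranked:
    print(f"{score:.3f}  {question}")
```

With these toy vectors the JupyterLab question ranks first, and the repeated capital-city questions cluster at the bottom. Comparing every pair is quadratic in the number of questions, which is still cheap at 2000.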

Taking the top and bottom 10 questions in novelty, we see that this novelty metric is fairly good at distinguishing boilerplate questions from ones that are more involved.

🚀 Top 10 Most Novel Questions:

1: If my friend and I are each working, but not for full-time positions, does it mean that both of us cannot file for unemployment benefits in our state?

2: What is the process to migrate an Oracle database from version 12c to version 19c?

3: How do you remove unused libraries in JupyterLab?

4: "What is the difference between 'allusion' and 'metaphor'?"

5: What is something you do on TikTok but not Instagram?

6: Given a specific company or entity, find out if it is a public limited company (PLC).

7: "Can the value of the Euler-Mascheroni constant be approximated using a divergent series?"

8: "How do I set up SSL certificate on my Apache web server?"

9: Why did John Boehner announce his resignation as Speaker of House in 2015?

10: If I want to use OpenAI's Codex model for content generation, what should I consider beforehand?

🚀 Top 10 Least Novel Questions:

1: "What is the capital city of Canada?"

2: "How many legs does an octopus have?"

3: Here are some examples:

4: What is the capital city of Australia?

5: Here is an example:

6: Question:

7: "How many legs does an octopus have?"

8: "What is the capital city of Canada?"

9: Here is an example:

10: What is the capital city of Australia?

So in principle, if we just kept going on and on, eventually we'd find all the interesting questions the model can ask, but that seems quite slow. How can we accelerate this process?

Recently I read a book by AI researchers Kenneth Stanley and Joel Lehman. They demonstrated some interesting examples and philosophical perspectives around the core idea of novelty search. The core of this idea is to use scoring criteria that are a moving target rather than a fixed scoring function. As a contrast, I'm also training a computer vision model. I might score it based on how well it does at finding the keypoints in my image. That scoring criterion doesn't change: if the model were in the same state and the same input were processed, the score would be the same. Novelty search is different. By scoring outputs based on how novel they are relative to past outputs, the target of what counts as a novel output moves. In their formulation, it's enough for an output simply to be novel, without caring about degree.

We can visualize this notion of novelty in a vector space. To start with, there's nothing to compare to, so a single embedding would be maximally novel. Then the second one would have to be directly opposite the first embedding to be maximally novel. If you keep going, the embeddings are forced to spread out evenly throughout the space.
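
The moving target can be sketched with a small archive of past embeddings. This is a simplified illustration, not the formulation from the book: `NoveltyArchive` is a hypothetical class, and scoring by cosine distance to the single nearest archived embedding is an assumption made to keep the example short.

```python
import math

def cosine_distance(a, b):
    """1 - cosine similarity: 0 for same direction, 2 for opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

class NoveltyArchive:
    """Keeps every past embedding and scores new ones against them."""

    def __init__(self):
        self.items = []

    def score(self, embedding):
        # With nothing to compare to, treat the output as maximally novel.
        if not self.items:
            return 2.0
        # Otherwise novelty is the distance to the closest past output.
        return min(cosine_distance(embedding, seen) for seen in self.items)

    def add(self, embedding):
        self.items.append(embedding)

archive = NoveltyArchive()
first = [1.0, 0.0]
print(archive.score(first))        # 2.0: empty archive
archive.add(first)
print(archive.score(first))        # 0.0: the same output is no longer novel
print(archive.score([-1.0, 0.0]))  # 2.0: directly opposite the first
print(archive.score([0.0, 1.0]))   # 1.0: orthogonal to the first
```

The same embedding scores 2.0 before it is archived and 0.0 afterwards, which is exactly what a fixed scoring function would never do.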

More abstractly, people have deliberately tried to sample from different states of consciousness in attempting to do original work. There are plenty of attempts with psychedelics. Another avenue is waking yourself during REM sleep so you're sampling from the space of dreams.

So the idea is that we can effectively do this with the questions a given model generates and apply that idea of novelty to the loss function of a fine tune of an existing model. The fine tune being scored by novelty should steer the model towards more and more novel questions.

Given my hardware constraints, I performed a small LoRA fine tune on that same Mistral 7B model. Initially it was doing a fair job. The responses got increasingly flowery, abstract, and poetic. In some ways that seems correct, but unfortunately, this pushing also causes the responses to eventually lose the form of a question. Outputs included failed Unicode strings, the word WHAT repeated any number of times with different capitalization, and sentence fragments. While novelty was increasing, the pressure pushed the model toward the edge of all possible outputs, not just questions.

This was expected to some extent. Seeing this behavior without a corrective mechanism gave further confidence that with a simple corrective mechanism, we could achieve our goal.

The simple solution is to throw in a hack. Have a supervisory model check whether the response has the form of a question and, if it doesn't, heavily penalize the novelty score. I did this via OpenAI's API, using gpt-3.5-turbo as the validator.
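
The shape of that penalty can be sketched as follows. To keep the example runnable offline, `looks_like_question` is a hypothetical string heuristic standing in for the gpt-3.5-turbo call, and the fixed penalty of -1.0 is an assumed value, not the one used in the experiment.

```python
def looks_like_question(text):
    """Hypothetical stand-in for the supervisory model's yes/no verdict."""
    stripped = text.strip().strip('"')
    return stripped.endswith("?") and len(stripped.split()) >= 3

def penalized_novelty(text, novelty, is_valid=looks_like_question, penalty=-1.0):
    """Keep the novelty score for valid questions, else return a fixed penalty."""
    return novelty if is_valid(text) else penalty

print(penalized_novelty("Why do leaves whisper secrets in the wind?", 0.8))  # 0.8
print(penalized_novelty("WHAT what WhAt WHAT", 0.95))                        # -1.0
print(penalized_novelty("Here is an example:", 0.1))                         # -1.0
```

Passing the validator in as `is_valid` means the heuristic can be swapped for a real model call without touching the scoring logic.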

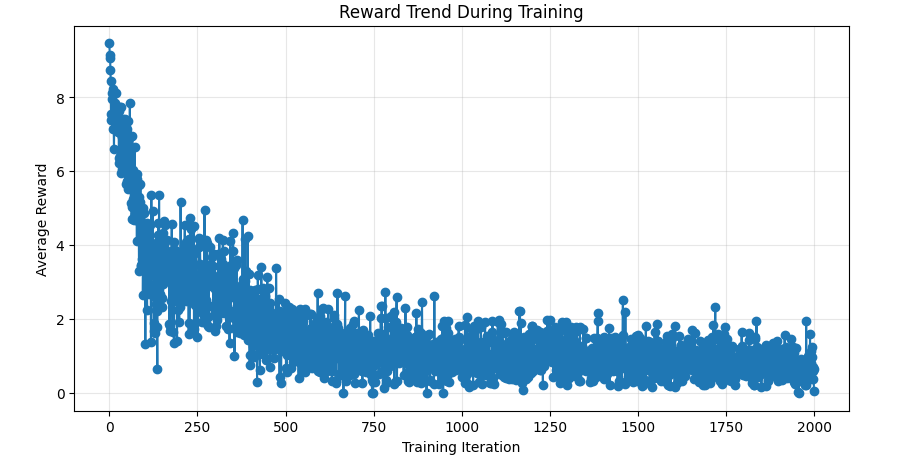

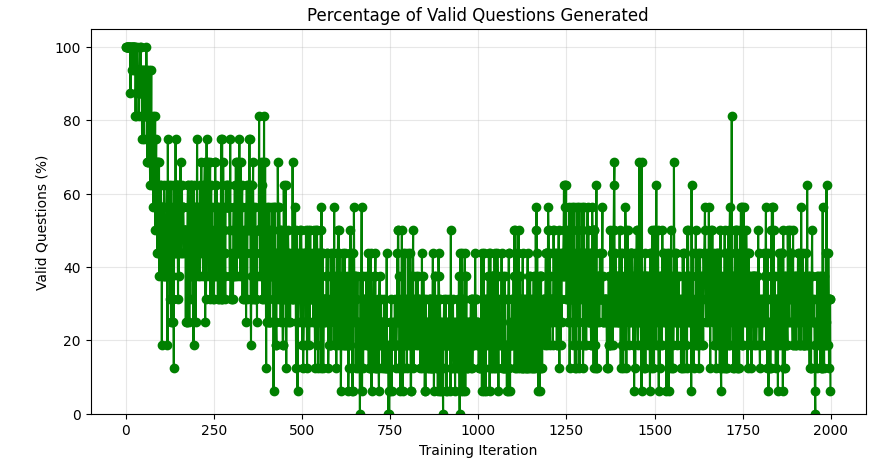

Running with this, the model does a much better job at the fine tune. We do get some interesting characteristics too. Initially, novelty is quite high, but we never quite return to those levels. As time goes on, novelty scores are increasingly muted but also seem to plateau. Additionally, the proportion of valid questions generated drops over time. It's as if the model is being mined for all the valid questions it can ask.

This does a good job for a while. Eventually, though, it does something perplexing. It starts generating very abstract questions that are semantically quite similar but still novel. These questions seem nonsensical to ask, quite similar to koans. Koans are questions to which there is no answer. "What is the sound of one hand clapping?" is a classic one. It seems that through the fine tune, we've steered into the initially most novel place, but now we've gotten stuck.

Koan-like Most Novel Questions:

1: Why do leaves whisper secrets in the wind?

2: What if stars are whispers of forgotten memories?

3: What lies at the heart of the forgotten melody played by the wind in an empty forest?

4: What is the first forgotten melody that whispers in the wind at twilight?

5: What is the unspoken rhythm of forgotten memories?

6: In what language does the last star whisper before it fades into the twilight?

7: What is the first whisper of memory in the silent forest after the last star has faded?

8: "If every star in the night sky were a forgotten memory, what would be the first one you choose to recall?"

9: What is the first whispered thought in the last moment before dawn?

10: "What is the color of the first star that appears in the twilight before dawn?"

A next step from here would be to find a strategy for getting unstuck. The next strategy we will attempt is to maintain the embedding database, but to reset the fine tune at some interval. That way the model starts fresh while the record of past questions persists, so it is less likely to settle back into the region of embedding space where it last got stuck.
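
Since this has not been run yet, the following is only a sketch of how the control flow might look. Every name here (`run_with_resets`, `make_adapter`, `train_step`) is hypothetical, and the toy adapter and embedding are placeholders for the real LoRA weights and embedding model.

```python
def run_with_resets(total_steps, reset_every, make_adapter, train_step):
    """Train with periodic adapter resets while the archive keeps growing."""
    archive = []              # embedding database: persists across resets
    adapter = make_adapter()  # fresh fine-tune state
    resets = 0
    for step in range(1, total_steps + 1):
        embedding = train_step(adapter, archive)
        archive.append(embedding)
        # Throw away the fine-tuned state at each interval, keep the archive.
        if step % reset_every == 0 and step < total_steps:
            adapter = make_adapter()
            resets += 1
    return archive, resets

# Toy stand-ins so the loop can run without a model.
def make_adapter():
    return {"updates": 0}

def train_step(adapter, archive):
    adapter["updates"] += 1
    # Fake embedding: archive size so far, and updates since the last reset.
    return [float(len(archive)), float(adapter["updates"])]

archive, resets = run_with_resets(10, 4, make_adapter, train_step)
print(len(archive), resets)  # 10 2
```

The second component of each fake embedding drops back to 1.0 after every reset, while the first keeps counting up, mirroring the idea that the weights restart but the memory of past questions does not.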